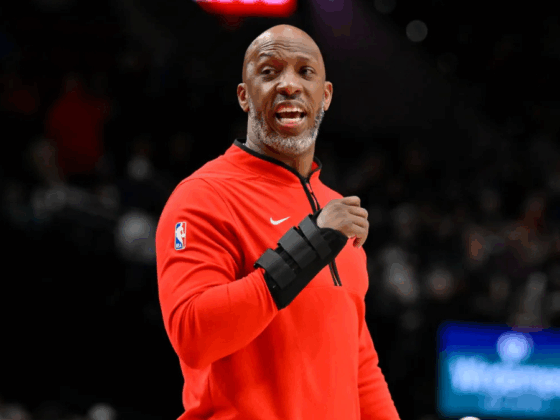

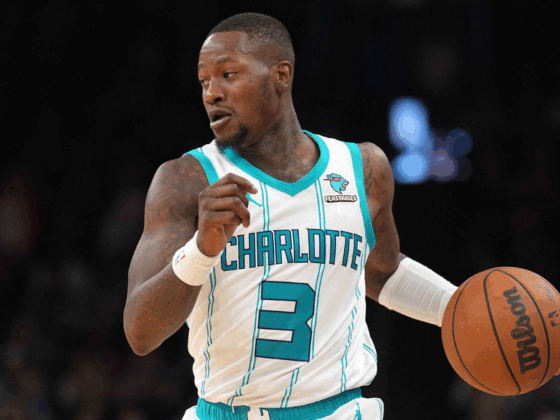

A few weeks ago, on January 20th, the New Orleans Pelicans surrendered an unlikely 143 points to the league-worst Brooklyn Nets. Astonishingly, the Pelicans ranked in the NBA’s top seven in defensive rating going into this game, which made the manner of the loss even more improbable. Any NBA team can beat another on a given night, but no one expected the league-worst Nets to pull off this feat!

Final in New Orleans. pic.twitter.com/Zw7B3vPB64

— New Orleans Pelicans (@PelicansNBA) January 21, 2017

Because of my astonishment, I wanted to find the probability that the New Orleans Pelicans would surrender 143 points to the Brooklyn Nets on their home court. To do this, I built a binary logistic regression model that used teams’ offensive and defensive ratings as continuous independent variables.

Section I – Finding the Probability of the Nets’ Scoring Outburst: Creating the Model

Note: If you prefer to skip the analytical context, jump to Sections III and IV. Section III gives the probability of the Pelicans surrendering 135+ points to the Nets. Section IV has a Shiny app that lets you input your own values to find the probability of a hypothetical Team A surrendering 135+ points to a hypothetical Team B.

I took 639 observations from Basketball Reference’s game logs, covering every game of the 2016-17 season through January 19th. I also took each team’s offensive and defensive ratings as of January 20th. I took its pace statistic (the number of possessions per 48 minutes) as of January 24th, because pace was a late addition to the model. Adding pace represents the probability of a high-scoring matchup more accurately, shifting it by a few percentage points either way.

Why use midseason cumulative values rather than each team’s pace and offensive/defensive ratings going into each game it played?

A portion of the 639 observations come from early-season games. For example, a team’s defensive rating on January 19th would probably differ from its defensive rating going into a November 21st matchup.

Shouldn’t we represent each team’s offensive efficiency, defensive efficiency, and pace as of the date of each game?

This is certainly a fair critique. However, there are two main reasons for using midseason values and trusting the results they yield:

- Availability – it would be impractical to compile date-specific values for all 600+ observations. This was probably the most salient reason.

- The larger sample behind each ORtg, DRtg, and pace value mitigates the effect of outliers on the observation pool (and ultimately on the model diagnostics). After more games, we have a better barometer of each team’s aptitude. Most NBA fans and pundits would agree that 82 games are enough to evaluate a team’s performance comprehensively. Evaluations from a 3-4 game stretch are noisier and less likely to reflect a team’s true capability.

For example, the Atlanta Hawks looked like near world-beaters at the start of the season and then promptly endured a precipitous slide. Offensive ratings, defensive ratings, and pace can vary significantly with strength of schedule, injuries, and so on. So the more games, the better our representation.

Section II – Independent and Dependent Variables for Logistic Regression Model

So, to compile a model that could explain the likelihood of this Nets/Pelicans occurrence, I considered the following variables for each event:

- As a binary dependent variable, “Surrender135”, which represents whether a team surrenders at least 135 points (more than 134) in a game.

*I initially tried a threshold of 143 or more, to find the probability of the Nets’ exact scenario, but with this observation pool it never produced a significant model.

Independent Variables:

- Team A Offensive Rating (as of Jan 19th)

- Team A Defensive Rating (as of Jan 19th)

- Team B Offensive Rating (as of Jan 19th)

- Team B Defensive Rating (as of Jan 19th)

- Overtime, “OT” (binary: 1 if the game went to OT, 0 if not)

- Home court (binary: 1 = yes, 0 = no)

- Combined Pace (Pace of Team A plus Team B)

In this circumstance, Team A is the one in question: Did Team A surrender the 135+?

Team B is the opponent.
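To make the setup concrete, here is a minimal Python sketch of how one game could be turned into a single Team A/Team B row. The original work was done in R and its data layout isn’t shown. The `TeamRatings` and `build_observation` helpers and the row field names are therefore hypothetical. The per-team pace split is also made up, since the post reports only the 199.9 combined pace for the Pelicans/Nets game.

```python
from dataclasses import dataclass


@dataclass
class TeamRatings:
    """Hypothetical container for one team's midseason numbers."""
    off_rtg: float  # points scored per 100 possessions
    def_rtg: float  # points allowed per 100 possessions (lower is better)
    pace: float     # possessions per 48 minutes


def build_observation(team_a, team_b, points_allowed_by_a, went_to_ot, threshold=135):
    """Hypothetical helper: one row of predictors plus the binary label for Team A."""
    return {
        "AOffRtg": team_a.off_rtg,
        "ADefRtg": team_a.def_rtg,
        "BOffRtg": team_b.off_rtg,
        "BDefRtg": team_b.def_rtg,
        "OT": 1 if went_to_ot else 0,
        "CombinedPace": team_a.pace + team_b.pace,
        # Label is 1 when Team A allowed at least `threshold` points, else 0
        f"Surrender{threshold}": 1 if points_allowed_by_a >= threshold else 0,
    }


# Pelicans (Team A) vs. Nets (Team B), ratings from Section III.
# The 99.5 / 100.4 pace split is hypothetical; only the 199.9 total is reported.
pelicans = TeamRatings(off_rtg=103.4, def_rtg=106.4, pace=99.5)
nets = TeamRatings(off_rtg=103.7, def_rtg=112.2, pace=100.4)
row = build_observation(pelicans, nets, points_allowed_by_a=143, went_to_ot=False)
print(row)
```

Passing `threshold=143` gives the stricter label the author first tried. A stricter threshold leaves fewer positive cases to fit, which is one plausible reason that version never produced a significant model.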

After testing the logistic regression, I removed home court. A log-likelihood ratio test, based on the chi-squared statistic, showed that including home court did not significantly improve the model, so the simpler model was preferred. The final model used “AOffRtg”, “ADefRtg”, “BOffRtg”, “BDefRtg”, “OT”, and “Combined Pace”.
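The home-court check can be sketched as a likelihood-ratio test between two nested models. This minimal Python version assumes the two models differ by exactly one predictor (home court), so the statistic has 1 degree of freedom. The log-likelihood values in the example are made up for illustration, since the post doesn’t report them. In R, a comparable test on two fitted `glm` objects is commonly run with `anova(reduced, full, test = "Chisq")`, though the post doesn’t say which function was used.

```python
import math


def likelihood_ratio_test_1df(loglik_reduced, loglik_full):
    """Likelihood-ratio test for nested models that differ by one predictor.

    G = 2 * (loglik_full - loglik_reduced) follows a chi-squared distribution
    with 1 degree of freedom if the extra predictor adds nothing.
    Returns (G, p_value).
    """
    g = 2.0 * (loglik_full - loglik_reduced)
    g = max(g, 0.0)  # a nested full model cannot fit worse; clip rounding noise
    # Upper tail of chi-squared with 1 df: P(X > g) = erfc(sqrt(g / 2))
    p_value = math.erfc(math.sqrt(g / 2.0))
    return g, p_value


# Hypothetical log-likelihoods: model without home court vs. model with it.
g, p = likelihood_ratio_test_1df(loglik_reduced=-80.5, loglik_full=-80.0)
print(f"G = {g:.3f}, p = {p:.4f}")
```

A large p-value like this one means the extra predictor doesn’t earn its place, so the smaller model is kept.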

Other diagnostics:

- The residual deviance was lower than the null deviance, which gives us reason to believe the predictors add real explanatory power over an intercept-only model. The coefficients were not regularized (e.g., with ridge regression), however.

- I also ran a performance test on the final model by computing the Area Under the ROC Curve (AUC). The model’s AUC was .95. An AUC of 1 indicates perfect discrimination and .5 indicates no better than chance, so values closer to one are preferred.
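Both diagnostics are easy to compute once you have each game’s 0/1 outcome and its fitted probability. The sketch below uses a five-game toy example (made-up numbers, not the post’s data). It shows mechanically what “residual deviance below null deviance” and an AUC mean. The function names are hypothetical. In R, `summary()` on a fitted `glm` reports both deviances, and AUC usually comes from a package such as pROC.

```python
import math


def deviance(y, p):
    """Binomial deviance: -2 * log-likelihood of 0/1 outcomes y under probabilities p.

    Assumes every p is strictly between 0 and 1, as fitted logistic values are.
    """
    return -2.0 * sum(
        math.log(pi) if yi == 1 else math.log(1.0 - pi)
        for yi, pi in zip(y, p)
    )


def null_deviance(y):
    """Deviance of the intercept-only model, which predicts the base rate everywhere."""
    base_rate = sum(y) / len(y)
    return deviance(y, [base_rate] * len(y))


def auc(y, p):
    """ROC AUC via its rank interpretation: the chance that a random 135+ game
    gets a higher fitted probability than a random non-135+ game (ties count half)."""
    pos = [pi for yi, pi in zip(y, p) if yi == 1]
    neg = [pi for yi, pi in zip(y, p) if yi == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


# Toy data: 1 = Team A surrendered 135+, with made-up fitted probabilities.
y = [1, 0, 1, 0, 0]
p = [0.9, 0.2, 0.35, 0.4, 0.1]
print(f"null deviance     = {null_deviance(y):.3f}")
print(f"residual deviance = {deviance(y, p):.3f}")
print(f"AUC               = {auc(y, p):.3f}")
```

An AUC computed on the same games used to fit a model tends to be optimistic. Scoring held-out games gives a more conservative estimate.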

To see whether the odds of surrendering 135+ increase or decrease with each variable, holding the others constant, let’s check the exponential of each coefficient, which is that variable’s odds ratio:

- (Intercept): 2.357857e-28

- AOffRtg: .783

- ADefRtg: 1.428 – The odds of surrendering 135 or more increase by 42.8% for each one-point increase in Team A’s defensive rating (a higher DRtg means a worse defense), which makes sense.

- BOffRtg: 1.318

- BDefRtg: .7988

- OT: 15.461 – Overtime greatly increases the odds that Team A surrenders 135+! For simplicity’s sake, I did not differentiate between OT, 2OT, and 3OT in the observation pool.

- Combined Pace: 1.223 – Each additional possession of combined pace raises the odds by about 22%. This is why you cannot use points per game (PPG) as a comparative league-wide measurement.
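Because each odds ratio applies per one-unit change, larger changes compound multiplicatively. This small Python sketch uses the rounded odds ratios listed above; the helper names are hypothetical. These are changes in odds, not probability. When the baseline probability is tiny, as it is for most games here, the two move almost in step, but they diverge near 50%.

```python
# Odds ratios (exponentiated coefficients) as reported above, rounded.
ODDS_RATIOS = {
    "AOffRtg": 0.783,
    "ADefRtg": 1.428,
    "BOffRtg": 1.318,
    "BDefRtg": 0.7988,
    "OT": 15.461,
    "CombinedPace": 1.223,
}


def odds_multiplier(variable, change=1.0):
    """Hypothetical helper: factor applied to the odds of a 135+ game when
    `variable` moves by `change` units and every other predictor is held fixed."""
    return ODDS_RATIOS[variable] ** change


def pct_change_in_odds(variable, change=1.0):
    """Hypothetical helper: the same effect as a percentage change in the odds."""
    return 100.0 * (odds_multiplier(variable, change) - 1.0)


print(f"{pct_change_in_odds('ADefRtg'):.1f}%")   # Team A DRtg one point worse
print(f"{odds_multiplier('ADefRtg', 5):.2f}x")   # Team A DRtg five points worse
print(f"{pct_change_in_odds('AOffRtg'):.1f}%")   # Team A ORtg one point better
```

For example, a five-point jump in Team A’s defensive rating multiplies the odds by roughly 5.9, not by 5 × 42.8%.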

Section III – Predicted Probability of Pelicans Surrendering 135+ to the Nets

So how probable was it for the New Orleans Pelicans’ highly ranked defense to surrender 135 or more points to the lowly Brooklyn Nets?

As of January 20th, the values were as follows:

- Team A (Pelicans) Off Rtg: 103.4

- Team A Def Rtg: 106.4

- Team B (Nets) Off Rtg: 103.7

- Team B Def Rtg: 112.2

- OT: 0

- Combined Pace: 199.9

The probability that the Pelicans would give up more than 134 points to the Nets was .07036 percent. In other words, according to the model, the Nets had about seven chances in 10,000 at this feat, and they still scored even more than that. The probability of allowing 143 would be even lower!
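The figure above can be approximately reproduced from the odds ratios alone. Take the log of each odds ratio to recover its coefficient, build the linear predictor, and apply the inverse logit. This is a minimal Python sketch, not the author’s R code. The published odds ratios are rounded to three or four digits and multiply inputs of 100 to 200, so the result only approximates the reported .07036 percent.

```python
import math

INTERCEPT_ODDS = 2.357857e-28  # exp(intercept), as reported
ODDS_RATIOS = {
    "AOffRtg": 0.783,
    "ADefRtg": 1.428,
    "BOffRtg": 1.318,
    "BDefRtg": 0.7988,
    "OT": 15.461,
    "CombinedPace": 1.223,
}


def prob_surrender_135(inputs):
    """Inverse logit of the linear predictor rebuilt from the rounded odds ratios.

    `inputs` maps each predictor name in ODDS_RATIOS to its value.
    """
    log_odds = math.log(INTERCEPT_ODDS)  # intercept coefficient
    for name, odds_ratio in ODDS_RATIOS.items():
        log_odds += math.log(odds_ratio) * inputs[name]  # coefficient * value
    return 1.0 / (1.0 + math.exp(-log_odds))


pelicans_vs_nets = {
    "AOffRtg": 103.4,
    "ADefRtg": 106.4,
    "BOffRtg": 103.7,
    "BDefRtg": 112.2,
    "OT": 0,
    "CombinedPace": 199.9,
}
p = prob_surrender_135(pelicans_vs_nets)
print(f"P(Pelicans allow 135+) = {100 * p:.4f}%")
```

With these rounded inputs, the result comes out near 0.065 percent. That is the same ballpark as the published figure: several chances in 10,000.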

Among the retroactively predicted probabilities for games in the observation pool, the maximum chance that Team A surrendered 135 or more points to Team B was 66.73%. It came in a Nets/Warriors matchup on December 22nd, of course. (Despite that probability, the Nets didn’t allow the Warriors to eclipse the mark. Brooklyn tightened up and gave up only 117 points to the Warriors in that home game.)

The probability that the Los Angeles Lakers would surrender 135+ to the Golden State Warriors on November 23rd was 31.16%. This scoring barrage did, in fact, take place.

The probability that the Brooklyn Nets surrendered 135+ to the Houston Rockets on January 15th was 25.37%; this happened as well.

Overall, the mean predicted probability across the observation pool was .94%, or about 94 surrenders of 135+ per 10,000 games.

Let’s get to the greatest part.

Section IV – R Shiny Predictive Model!

After finalizing the model, I built a Shiny web app with the help of @theorthographer. Using the slider inputs in the sidebar panel, you can find the probability of any Team A surrendering 135+ points to any Team B.

You can find an approximate percentage chance for any current NBA team given its current offensive rating, defensive rating, and pace statistic.

You can even use it to predict any hypothetical scenario that you please.

Have fun with it. As I gather more observations to reduce the error in this model, I expect the explanatory power and flexibility of logistic regression models like this one to lead to more probability projects.

Don’t forget that you can click on the whitespace in the app to scroll up and down within it. If, for any reason, the slider inputs haven’t loaded or the probability % hasn’t appeared, just refresh the page.

The probability should appear in green.